Justin Barry

ML research engineer & applied scientist building intelligent systems

Former Applied Scientist @ Amazon • MS Computer Science • BS Math+CS

Machine Learning Applied Scientist and Research Engineer. Former Amazon ML Scientist (Prime Video).

I design and ship machine learning systems—generative and discriminative—across vision, language, and structured data. I own the math and the PyTorch. I build architectures from first principles when off-the-shelf fails, and I build agentic systems: multi-agent LLM pipelines that generate, critique, and rank.

My edge is messy problems. When baselines don't work and the objective isn't obvious, I turn ambiguity into a clear loss function and a system you can deploy.

I work remotely as an embedded research engineer—either as a full-time hire or via my LLC on longer-term engagements and scoped projects.

Featured Projects

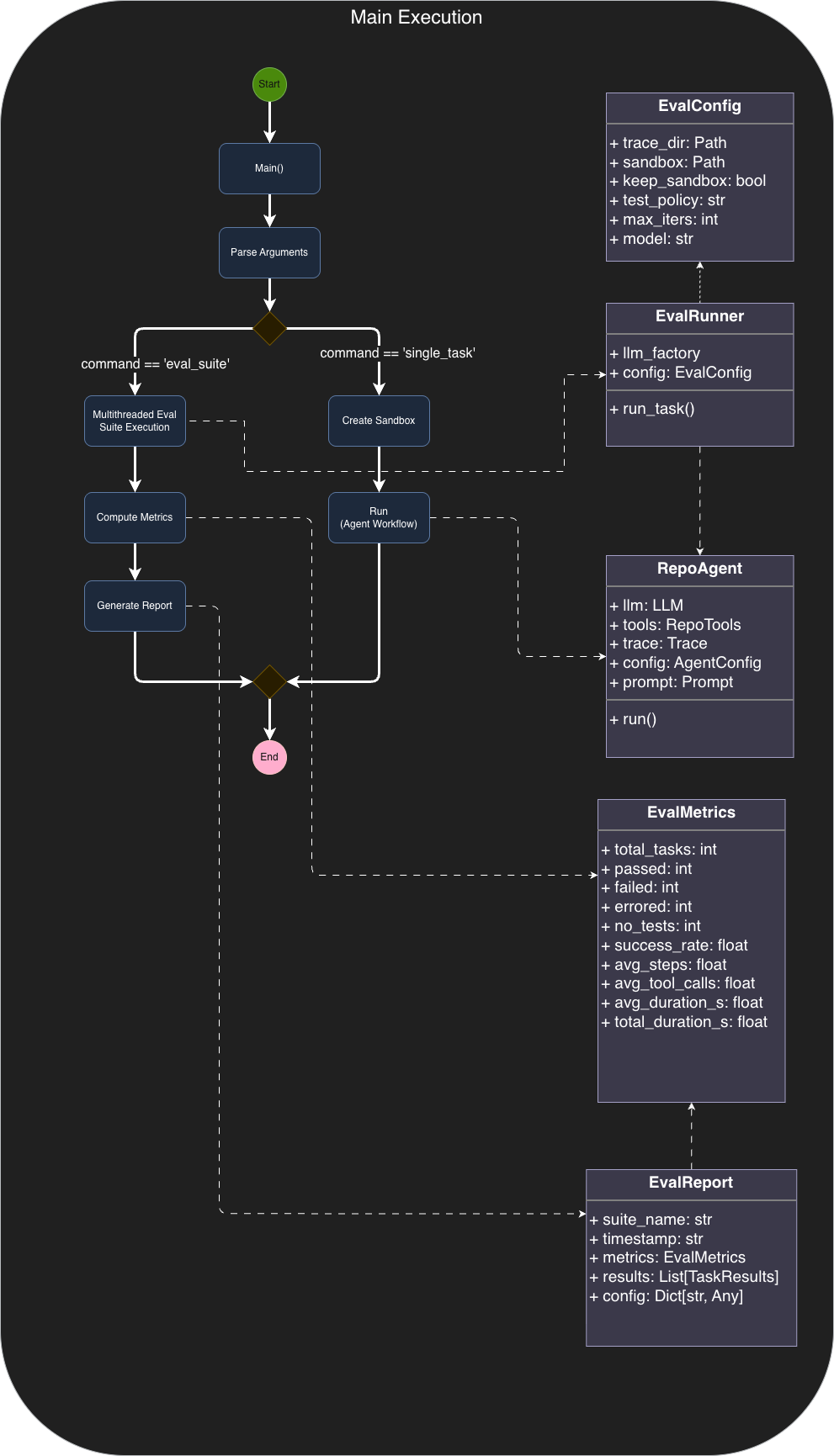

LLM Repo Agent: Bug-Fixing Code Agent

A deterministic agent system for automated bug fixing. The Python driver owns all control flow—iteration limits, tool dispatch, test execution, reflection triggers—while the LLM handles reasoning within strict boundaries.

Key engineering choices: a JSON tool protocol with schema validation, multi-turn ChatCompletion LLM adapters that keep the tool-call history available for reference, sandboxed repo copies for safe execution, Reflexion-style reflection triggered by driver-loop events and failed tests, and multithreaded evaluation with Monte Carlo rollouts across task suites.
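A minimal sketch of what a driver-owned loop with a validated JSON tool protocol can look like. It is not the project's code: the tool names, the `{"tool", "args"}` message shape, and the `llm` callable are all hypothetical stand-ins chosen for illustration.

```python
import json

# Hypothetical tool registry: tool name -> (required argument names, handler).
TOOLS = {
    "read_file": (("path",), lambda args: f"<contents of {args['path']}>"),
    "run_tests": ((), lambda args: "1 failed, 3 passed"),
}


def parse_tool_call(raw: str) -> dict:
    """Parse and validate one JSON tool call; raise ValueError on any violation."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict) or set(call) != {"tool", "args"}:
        raise ValueError("expected an object with exactly the keys 'tool' and 'args'")
    if not isinstance(call["tool"], str) or call["tool"] not in TOOLS:
        raise ValueError(f"unknown tool: {call['tool']!r}")
    if not isinstance(call["args"], dict):
        raise ValueError("'args' must be an object")
    required, _ = TOOLS[call["tool"]]
    missing = [name for name in required if name not in call["args"]]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return call


def run_driver(llm, max_steps: int = 5) -> list:
    """Driver-owned loop: the model proposes a call, the driver decides what runs."""
    history = []
    for _ in range(max_steps):  # the iteration limit lives in the driver, not the model
        raw = llm(history)
        try:
            call = parse_tool_call(raw)
        except ValueError as err:
            # Invalid calls are never executed; the error is fed back as an observation.
            history.append({"role": "driver", "error": str(err)})
            continue
        _, handler = TOOLS[call["tool"]]
        history.append({"role": "tool", "tool": call["tool"], "result": handler(call["args"])})
    return history


if __name__ == "__main__":
    # A scripted stand-in for the model, including one malformed reply.
    scripted = iter([
        '{"tool": "read_file", "args": {"path": "app.py"}}',
        "not json",
        '{"tool": "run_tests", "args": {}}',
    ])
    for event in run_driver(lambda history: next(scripted), max_steps=3):
        print(event)
```

Because validation happens before dispatch, a malformed reply costs one iteration and produces an error observation instead of an unhandled exception or an unintended tool call.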

Fine-tuning pipeline: Teacher traces from GPT-4o generate step-level SFT data, followed by DPO preference pairs from pass/fail rollouts. The goal is distilling reliable tool-calling behavior into smaller open-weight models like Qwen2.5-7B.
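One way to turn pass/fail rollouts into DPO preference pairs, sketched under assumptions: the record fields (`task_id`, `prompt`, `response`, `passed`) are hypothetical, rollouts of the same task are assumed to share a prompt, and pairing every pass with every fail is only one of several reasonable pairing strategies.

```python
from collections import defaultdict
from itertools import product


def build_preference_pairs(rollouts):
    """Pair each passing rollout with each failing rollout of the same task."""
    by_task = defaultdict(lambda: {"pass": [], "fail": []})
    for rollout in rollouts:
        bucket = "pass" if rollout["passed"] else "fail"
        by_task[rollout["task_id"]][bucket].append(rollout)

    pairs = []
    for group in by_task.values():
        # Tasks whose rollouts all passed or all failed yield no pairs.
        for chosen, rejected in product(group["pass"], group["fail"]):
            pairs.append({
                "prompt": chosen["prompt"],
                "chosen": chosen["response"],
                "rejected": rejected["response"],
            })
    return pairs


if __name__ == "__main__":
    demo = [
        {"task_id": "t1", "prompt": "fix bug", "response": "A", "passed": True},
        {"task_id": "t1", "prompt": "fix bug", "response": "B", "passed": False},
    ]
    print(build_preference_pairs(demo))
```

Tasks with no contrast between outcomes carry no preference signal in this scheme, which is why they are dropped rather than padded with synthetic pairs.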

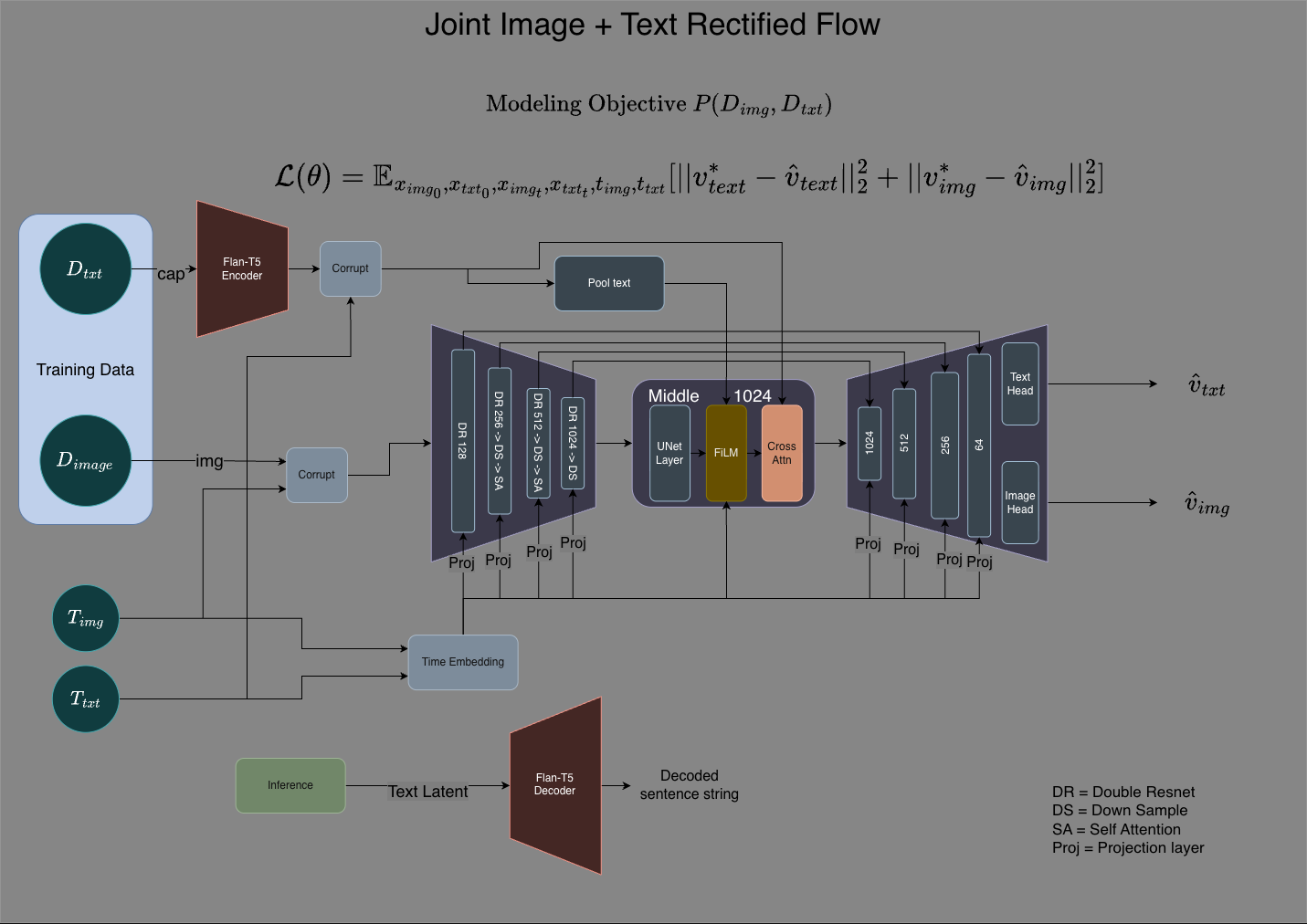

Joint Image+Text Rectified Flow Training Loop

Building a joint image and text Rectified Flow model from scratch in PyTorch. This project demonstrates the complete training loop implementation, covering the mathematical foundations and practical engineering of modern generative models.

What it covers: Rectified flow theory, velocity field parameterization, joint image-text conditioning, noise scheduling, and the training objective that enables straight-line probability paths between noise and data distributions.

Architecture Diagram

Concept Explanation

Training Loop Implementation

Inference
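For reference, the standard rectified flow objective behind the straight-line paths mentioned above can be written as follows. The notation is an assumption, not taken from the project: \(x_0\) is a noise sample, \(x_1\) a data sample, \(c\) the conditioning signal, and \(v_\theta\) the learned velocity field. Some implementations reverse which endpoint is noise.

```latex
\[
x_t = (1 - t)\,x_0 + t\,x_1, \qquad t \sim \mathcal{U}[0, 1]
\]
\[
\mathcal{L}(\theta) =
\mathbb{E}_{t,\,x_0,\,x_1}
\left[ \left\| v_\theta(x_t, t, c) - (x_1 - x_0) \right\|^2 \right]
\]
```

The regression target \(x_1 - x_0\) is the time derivative of the interpolation \(x_t\), so it is constant along each path. Sampling then integrates \(\mathrm{d}x_t/\mathrm{d}t = v_\theta(x_t, t, c)\) from \(t = 0\) to \(t = 1\).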

Experience

Industry experience at scale

Machine Learning Consultant

Independent • Remote

Machine Learning Content Creator

YouTube Channel: @JustinTheJedi • Remote

Senior Machine Learning Scientist

Spotter • Los Angeles, CA

ML Consultant

Independent • Remote

Machine Learning Scientist

Amazon • Seattle

Senior Software Engineer

CSRA Inc • Washington, DC

Technical Deep Dives

Building advanced ML architectures from first principles

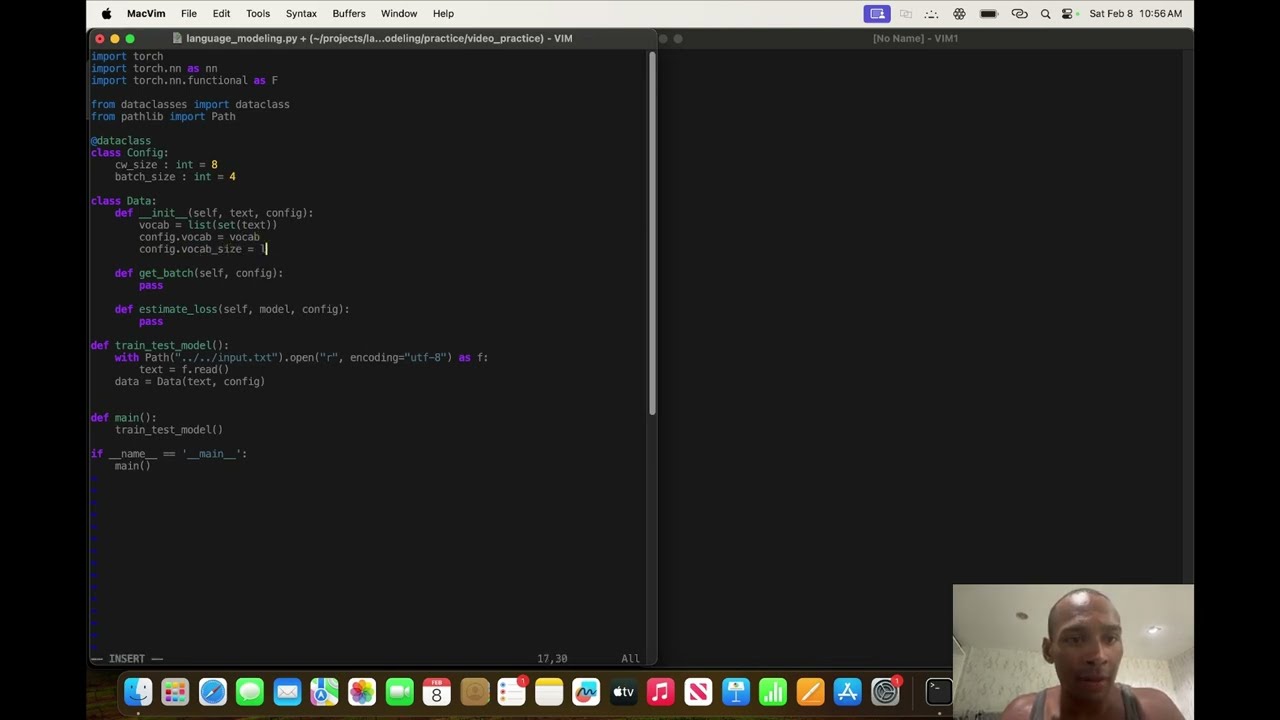

NanoGPT from scratch in PyTorch

Complete implementation of a GPT-style transformer language model, covering attention mechanisms, positional encodings, and autoregressive training.

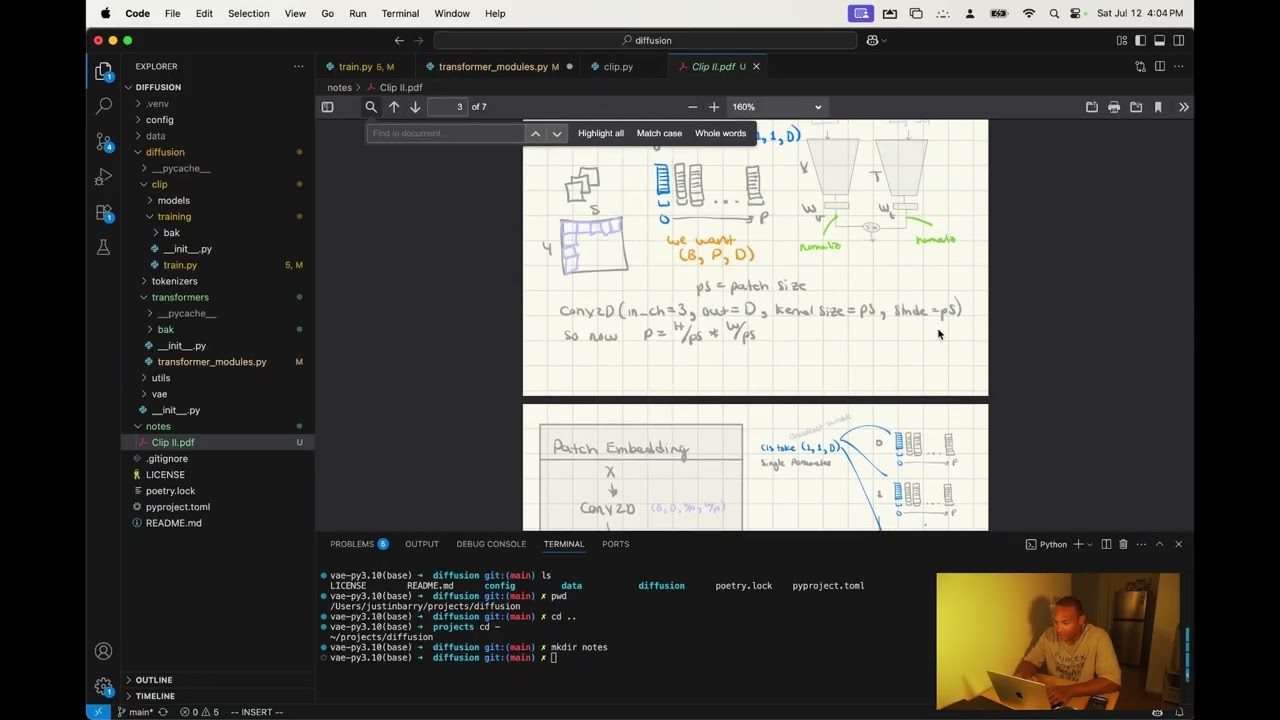

CLIP from scratch in PyTorch

Building OpenAI's CLIP model from the ground up—dual encoders for vision and language with contrastive learning objectives.
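The contrastive objective for a dual-encoder model of this kind is usually a symmetric cross-entropy over the batch similarity matrix. The symbols here are assumptions for illustration: \(u_i\) and \(v_i\) are the L2-normalized image and text embeddings of the \(i\)-th pair, \(N\) is the batch size, and \(\tau\) is a temperature.

```latex
\[
\mathcal{L}_{\text{img}\to\text{txt}} =
-\frac{1}{N} \sum_{i=1}^{N}
\log \frac{\exp(u_i^\top v_i / \tau)}{\sum_{j=1}^{N} \exp(u_i^\top v_j / \tau)},
\qquad
\mathcal{L}_{\text{txt}\to\text{img}} =
-\frac{1}{N} \sum_{i=1}^{N}
\log \frac{\exp(u_i^\top v_i / \tau)}{\sum_{j=1}^{N} \exp(u_j^\top v_i / \tau)}
\]
\[
\mathcal{L} = \tfrac{1}{2}
\left( \mathcal{L}_{\text{img}\to\text{txt}} + \mathcal{L}_{\text{txt}\to\text{img}} \right)
\]
```

Each matched pair is the positive for its row and its column, and every other item in the batch acts as a negative.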

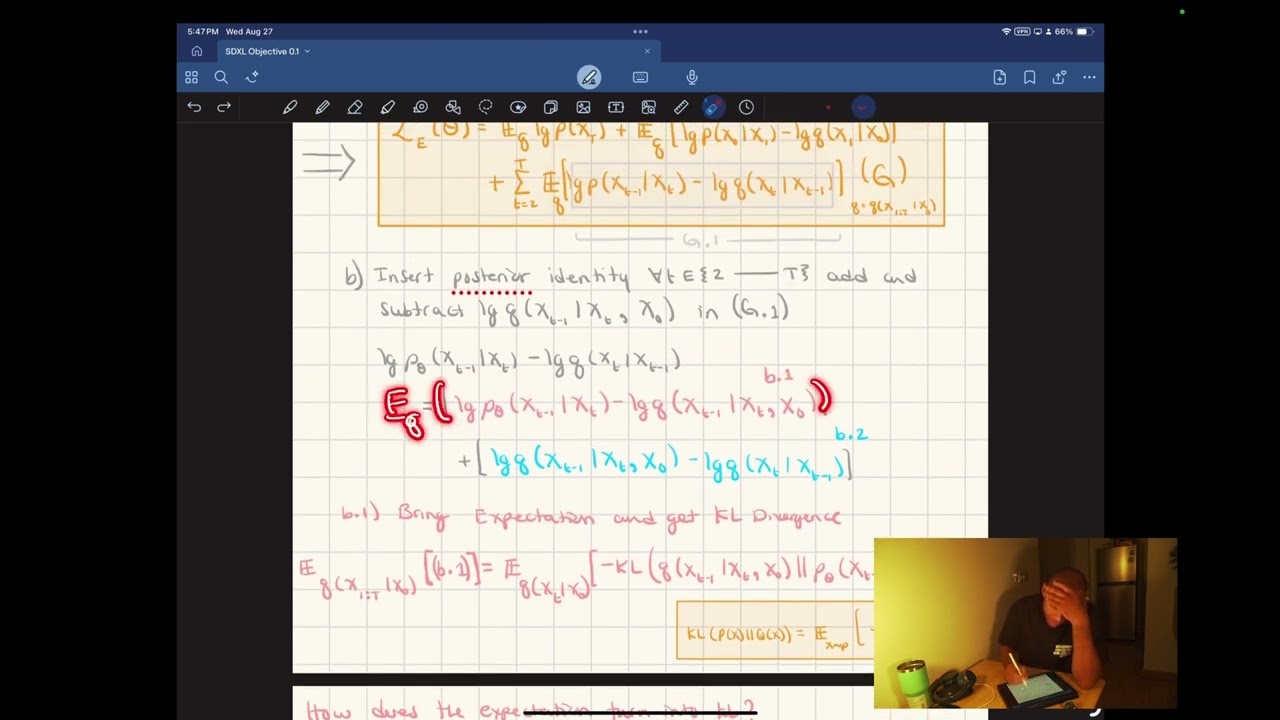

Stable Diffusion XL Objective Function Derivation

Mathematical derivation of the SDXL training objective, from variational lower bounds to practical noise scheduling strategies.

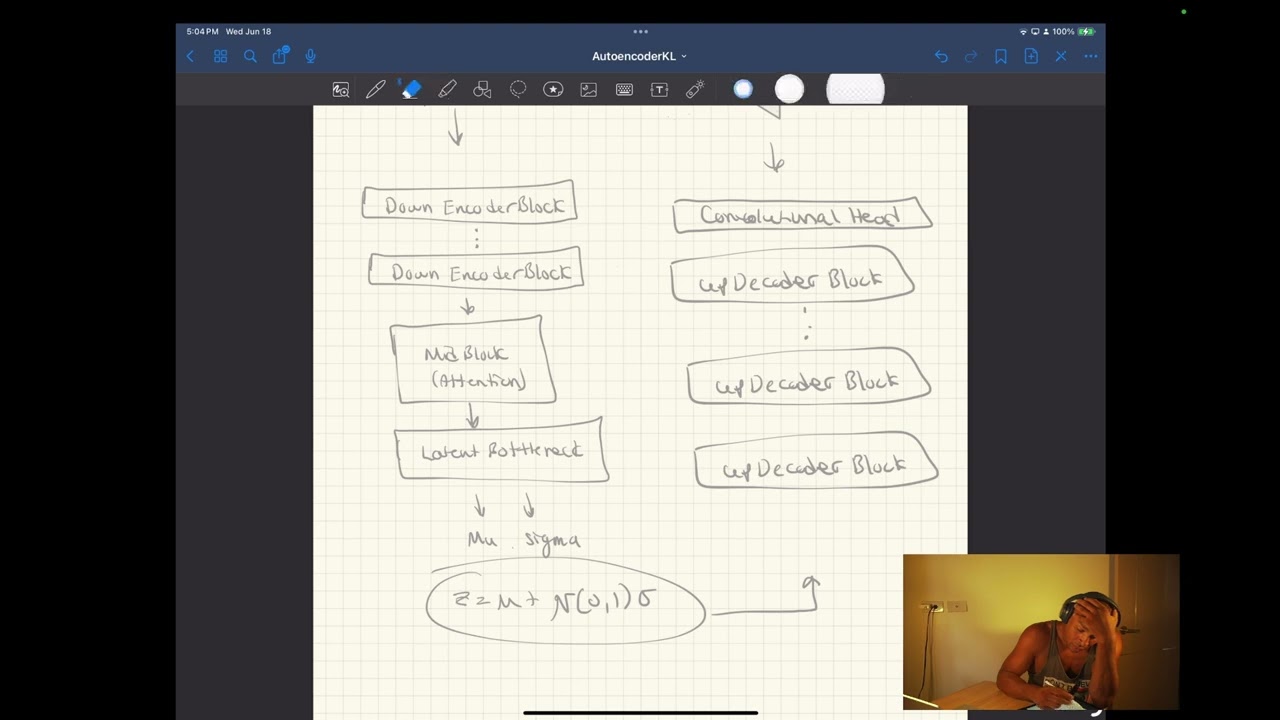

Building SDXL's AutoencoderKL from Scratch in PyTorch

Implementing the KL-regularized autoencoder used in Stable Diffusion XL—encoder, decoder, and the variational objective that enables latent space compression.
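A generic form of the KL-regularized autoencoder objective, written under assumptions: \(E\) and \(D\) are the encoder and decoder, the encoder outputs a diagonal Gaussian with mean \(\mu\) and standard deviation \(\sigma\), \(\beta\) is a small weight, and the reconstruction term \(\mathcal{L}_{\text{rec}}\) is left unspecified because it may combine several terms in practice.

```latex
\[
z = \mu + \sigma \odot \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)
\]
\[
\mathcal{L} = \mathcal{L}_{\text{rec}}\big(x, D(z)\big)
+ \beta \, D_{\mathrm{KL}}\big( \mathcal{N}(\mu, \sigma^2 I) \,\|\, \mathcal{N}(0, I) \big)
\]
\[
D_{\mathrm{KL}} = \frac{1}{2} \sum_{k}
\left( \mu_k^2 + \sigma_k^2 - 1 - \log \sigma_k^2 \right)
\]
```

The closed-form KL term keeps the latent distribution close to a unit Gaussian, and the reparameterization \(z = \mu + \sigma \odot \epsilon\) lets gradients flow through the sampling step.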

Featured Blog Posts

Deep dives into ML research and engineering

Modern LLM Post-Training Series

A comprehensive 5-part series covering PPO-RLHF, DPO, GRPO, GDPO, and practical SFT experiments. Learn the evolution of post-training algorithms and what each method removes or modifies.

JanusFlow

JanusFlow unifies image understanding and generation in a single LLM backbone using rectified flow. This post explains the architecture, training stages, representation alignment, and why the shared backbone matters.

Tiny Recursive Model (TRM)

TRM simplifies HRM by removing fixed-point theory, eliminating ACT's extra forward pass, and backpropagating through full unrolled recursion with no-grad refinement passes.

Essential PyTorch: the 5% that shows up in almost every serious model build

PyTorch looks huge from the outside. In practice, I build Transformers, CLIP, diffusion, flows, and VAEs using a small set of tensor and module patterns over and over. This post is that core.

Education

MS in Computer Science

(former PhD track) • University of Central Florida

BS in Mathematics and Computer Science

Dual Major

Christopher Newport University

GEM Fellowship

National GEM Consortium